Why do we use similarity to gauge statistical probability?

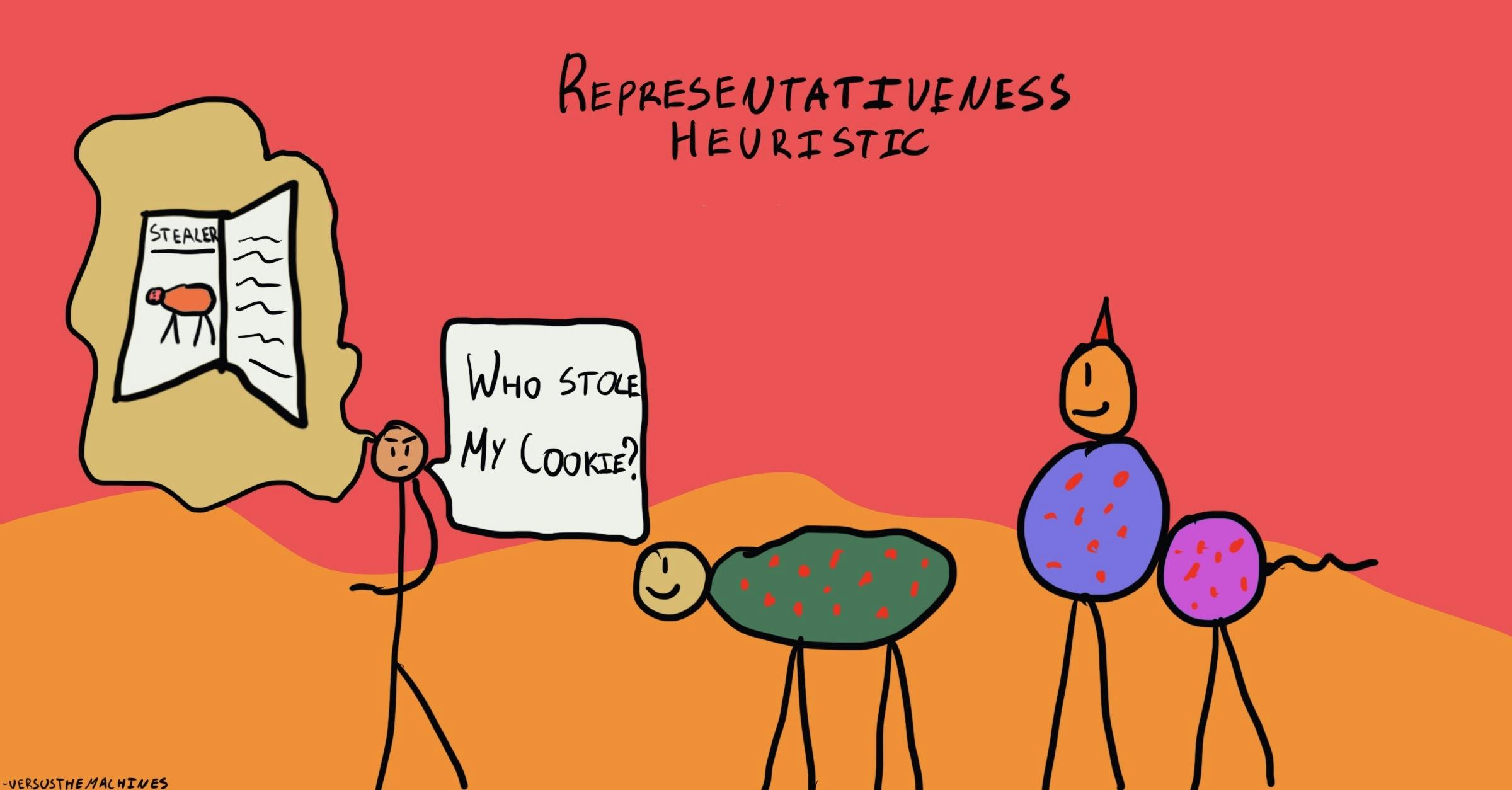

The Representativeness Heuristic, explained.

What is the Representativeness Heuristic?

The representativeness heuristic is a mental shortcut that we use when estimating probabilities. When we’re trying to assess how likely a certain event is, we often make our decision by assessing how similar it is to an existing mental prototype.

Where this bias occurs

Let’s say you’re going to a concert with your friend Sarah. She also invited her two friends, John and Adam, whom you’ve never met before. You know that one is a mathematician, while the other is a musician.

When you finally meet Sarah’s friends, you notice that John wears glasses and is a bit shy, while Adam is more outgoing and dressed in a band T-shirt and ripped jeans. Without asking, you assume that John must be the mathematician and Adam must be the musician. You later discover that you were mistaken: Adam does math, and John plays music.

Thanks to the representativeness heuristic, you guessed Adam and John’s jobs based on stereotypes about how people in these careers typically look and dress. Relying on resemblance caused you to ignore better indicators of their professions, such as simply asking them what they do for a living.

Individual effects

Since we tend to rely on representativeness, we often fail to consider other types of information, causing us to make poor predictions. The representativeness heuristic is so pervasive that many researchers believe it is the foundation of several other biases that affect our processing, including the conjunction fallacy and the gambler's fallacy.

The conjunction fallacy occurs when we assume multiple things are more likely to co-occur than a single thing on its own. Statistically speaking, this is never the case: two things happening together can be no more probable than either one happening on its own. Still, the representativeness heuristic may convince us otherwise.

Take Linda, a bright philosophy graduate who is deeply concerned with discrimination and social justice. When given the option, we are much more likely to guess that she is both an active feminist and a bank teller, rather than just a bank teller.6 This is because of representativeness: the fact that Linda resembles a prototypical feminist skews our ability to judge the probability of her career.
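To make the rule behind the bank teller example concrete, here is a minimal sketch in Python. The two probabilities are hypothetical numbers picked purely for illustration; they do not come from the original study. Whatever values are plugged in, the probability of "bank teller and feminist" can never exceed the probability of "bank teller" alone.

```python
from fractions import Fraction


def conjunction_probability(p_a: Fraction, p_b_given_a: Fraction) -> Fraction:
    """Return P(A and B) = P(A) * P(B | A)."""
    return p_a * p_b_given_a


# Hypothetical values, assumed only for this illustration.
p_bank_teller = Fraction(1, 50)             # P(she is a bank teller)
p_feminist_given_teller = Fraction(9, 10)   # P(active feminist | bank teller)

p_both = conjunction_probability(p_bank_teller, p_feminist_given_teller)

print(p_both)                    # 9/500
print(p_both <= p_bank_teller)   # True: the conjunction is never more likely
```

Because P(B | A) is at most 1, multiplying by it can only shrink P(A) or leave it unchanged, which is why adding a vivid detail can make a story feel more plausible while making it less probable.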

Another bias caused by the representativeness heuristic is the gambler’s fallacy, which causes people to apply long-term odds to short-term sequences. For example, in a coin toss, there is roughly a fifty-fifty chance of getting either heads or tails. This doesn’t mean that if you flip a coin twice, you’ll get heads one time and tails the other. The probability only works over long sequences, such as tossing a coin a hundred times. However, we believe that short-term odds should represent their long-term counterparts, even though this is almost never the case.7

As its name suggests, the gambler’s fallacy can have serious consequences for gamblers. For example, somebody may believe that their odds of winning are better after a short losing streak, even though each bet is independent and a run of losses does nothing to improve the odds of the next one.
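The coin toss reasoning above can be checked by brute force. The sketch below assumes a perfectly fair coin with independent flips, so every sequence of heads and tails is equally likely; the function names are illustrative. It counts sequences exactly rather than simulating them.

```python
from fractions import Fraction
from itertools import product


def prob_heads_after_tails_streak(streak_length: int) -> Fraction:
    """Exact P(next flip is heads | the previous `streak_length` flips were tails)."""
    sequences = list(product("HT", repeat=streak_length + 1))
    # Keep only the sequences that begin with an unbroken run of tails.
    after_streak = [s for s in sequences if all(f == "T" for f in s[:streak_length])]
    heads_next = [s for s in after_streak if s[-1] == "H"]
    return Fraction(len(heads_next), len(after_streak))


def prob_even_split(num_flips: int) -> Fraction:
    """Exact P(exactly half of `num_flips` flips come up heads)."""
    sequences = list(product("HT", repeat=num_flips))
    balanced = [s for s in sequences if s.count("H") * 2 == num_flips]
    return Fraction(len(balanced), len(sequences))


print(prob_heads_after_tails_streak(5))  # 1/2: the streak changes nothing
print(prob_even_split(2))                # 1/2: two flips split evenly only half the time
print(prob_even_split(10))               # 63/256: an exact 5-5 split is the exception
```

Under these assumptions, a streak of tails leaves the chance of heads on the next flip at exactly one half, and short sequences frequently fail to mirror the long-run fifty-fifty ratio.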

Systemic effects

Our reliance on categories can easily tip over into prejudice, even if we don’t realize it. The way that mass media portrays minority groups often reinforces commonly-held stereotypes. For instance, Black men tend to be overrepresented in coverage of crime and poverty, while they are underrepresented as “talking head” experts.9 These patterns support a narrative that Black men are violent, which even Black viewers may internalize and incorporate into their categorization.

These stereotypes from the representativeness heuristic contribute to systemic discrimination. For example, police looking for a crime suspect might focus disproportionately on Black people in their search. Their prejudices cause them to assume that a Black person is more likely to be a criminal than somebody from another group.

How it affects product

Representativeness is a valuable tool for developing user interface (UI). Digital designers have intentionally incorporated symbols representing categories to guide us when we navigate virtual spaces, often without us even realizing it.

For example, when we see the trash bin icon, we know we can drag our documents over to dispose of them, just as we would throw out paper documents in real life. Or when we see a floppy disk icon, we know we can click on it to save our document, just as we used to store information on physical disks. These prototypes are a good reminder that familiar physical objects can help us understand digital ones when designing new products.

The representativeness heuristic and AI

Machine learning has optimized categorization by relying on statistical patterns and base rates to sort information. However, humans still succumb to the representativeness heuristic while interpreting these outputs.

For example, the healthcare system has adopted AI technology to help diagnose patients by scanning medical images and comparing them to thousands more in its dataset. Doctors may be more inclined to trust an AI’s diagnosis if the symptoms match a disease's prototypical description. However, doctors might dismiss AI diagnoses when the two do not align, even though the AI has access to many more rare or unusual presentations of symptoms in its data than doctors may have encountered in their careers.

Why it happens

The representativeness heuristic was coined by Daniel Kahneman and Amos Tversky, two of the most influential figures in behavioral economics. The classic example they used to illustrate this bias asks the reader to consider Steve: His friends describe him as “very shy and withdrawn, invariably helpful, but with little interest in people, or in the world of reality. A meek and tidy soul, he has a need for order and structure, and a passion for detail.” After reading this description, do you think Steve is a librarian or a farmer?2

Most of us intuitively feel like Steve must be a librarian because he’s more representative of our image of a librarian than he is of our image of a farmer. In reality, no evidence directly points to Steve’s career, so we rely on stereotypes to dictate our decision.

Conserving energy with categories

As with all biases, the main reason we rely on representativeness is that we have limited mental resources. Since we make thousands of daily decisions, our brains are wired to conserve as much energy as possible. This means we often rely on shortcuts to quickly judge the world around us. However, there is another reason why the representativeness heuristic happens, rooted in how we perceive people and objects.

We draw on prototypes to make decisions

Grouping similar things together—that is, categorizing them—is an essential part of how we make sense of the world. This might seem like a no-brainer, but categories are more fundamental than many realize. Think of all the things you encounter in a single day. Whenever we interact with people, animals, or objects, we draw on the knowledge we’ve learned about that category to know what to do.

For instance, when you go to a dog park, you might see animals in a huge range of shapes, sizes, and colors. But since you can categorize them all as “dogs,” you immediately know what to expect: they run and chase things, like getting treats, and if one of them starts growling, you should probably back away.

Without categories, every time we encountered something new, we would have to learn what it was and how it worked from scratch. Not to mention the fact that storing so much information about every separate entity would be impossible given our limited cognitive capacity. For this reason, our ability to understand and remember things about the world relies on categorization.

On the flip side, the way we originally learned to categorize things can also affect how we perceive them.3 For example, in Russian, lighter and darker shades of blue have different names (“goluboy” and “siniy,” respectively), whereas in English, we refer to both as “blue.” Research reveals that this difference in categorization affects how people actually perceive the color blue: Russian speakers are faster at discriminating between light and dark blues compared to English speakers.4

According to one hypothesis of categorization known as prototype theory, we use unconscious mental statistics to figure out what the “average” member of a category looks like. When we are trying to make decisions about unfamiliar things or people, we refer to this average—the prototype—as a representative example of the entire category. There is some interesting evidence to support the idea that humans are somehow able to compute “average” category members like this. For instance, people tend to find faces more attractive the closer they are to the “average” face as generated by a computer.5

Prototypes guide our estimates about probability, just like in the example where we guessed Steve’s profession. Our prototype for librarians is probably somebody who resembles Steve quite closely—shy, neat, and nerdy—while our prototype for farmers is probably somebody more muscular, more down-to-earth, and less timid. Intuitively, we feel like Steve must be a librarian because we are bound to think in terms of categories and averages.

We overestimate the importance of similarity

The problem with the representativeness heuristic is that similarity on its own tells us little about probability, and yet we put more value on it than we do on relevant information. One such type of information is base rates: statistics revealing how common something is in the general population. For instance, in the United States there are many more farmers than there are librarians. This means that, statistically speaking, Steve is not “more likely” to be a librarian just because he fits the stereotype: a shy, tidy person may well still come from the much larger group.2
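A short calculation shows how a base rate can outweigh resemblance. This is a sketch using Bayes' rule; every number in it is a hypothetical assumption made for illustration (20 farmers for every librarian, and a description that fits librarians four times as often as farmers), not a real statistic.

```python
from fractions import Fraction


def posterior(prior_a, likelihood_a, prior_b, likelihood_b):
    """Bayes' rule for two mutually exclusive, exhaustive hypotheses A and B.

    Returns P(A | evidence).
    """
    evidence = prior_a * likelihood_a + prior_b * likelihood_b
    return prior_a * likelihood_a / evidence


# Hypothetical base rates: assume 20 farmers for every librarian.
prior_librarian = Fraction(1, 21)
prior_farmer = Fraction(20, 21)

# Hypothetical fit: assume the "shy and tidy" description matches
# 40% of librarians but only 10% of farmers.
fit_given_librarian = Fraction(2, 5)
fit_given_farmer = Fraction(1, 10)

p_librarian = posterior(prior_librarian, fit_given_librarian,
                        prior_farmer, fit_given_farmer)

print(p_librarian)        # 1/6
print(1 - p_librarian)    # 5/6: a farmer is still the better bet
```

Under these assumed numbers, the description is four times more typical of librarians, yet Steve is five times more likely to be a farmer. Resemblance would only win if it were strong enough to overcome the 20-to-1 difference in group size.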

Sample size is another useful type of information that we often neglect. When estimating a large population based on a sample, we want our sample to be as large as possible to give us a more complete picture. But when we focus too much on representativeness, sample size can end up being crowded out.

To illustrate this, imagine a jar filled with balls. ⅔ of the balls are one color, while ⅓ are another color. Sally draws five balls from the jar, of which four are red and one is white. James draws 20 balls, of which 12 are red and eight are white. Between Sally and James, who should feel more confident that the balls in the jar are ⅔ red and ⅓ white?

Most people say Sally has better odds of being right because the proportion of red balls she drew is larger than the proportion James drew. But this is incorrect: James drew a larger sample than Sally, so his result is stronger evidence about the contents of the jar. We are tempted to go for Sally’s 4:1 sample because it is more representative of the ratio we’re looking for than James’s 12:8, but this leads us to an error in our judgment.
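The strength of each sample can be worked out directly. The sketch below rests on a few assumptions that the puzzle leaves implicit: the only two possibilities are "⅔ red, ⅓ white" and "⅓ red, ⅔ white," both are equally plausible beforehand, and the jar is large enough that each draw is effectively independent. The function name is illustrative.

```python
from fractions import Fraction


def odds_mostly_red(red: int, white: int) -> Fraction:
    """Odds in favor of a 2/3-red jar over a 2/3-white jar after a sample.

    Assumes equal prior odds and independent draws. The binomial
    coefficient is the same under both hypotheses, so it cancels out.
    """
    majority, minority = Fraction(2, 3), Fraction(1, 3)
    likelihood_mostly_red = majority**red * minority**white
    likelihood_mostly_white = minority**red * majority**white
    return likelihood_mostly_red / likelihood_mostly_white


print(odds_mostly_red(4, 1))    # 8  (Sally: 8 to 1)
print(odds_mostly_red(12, 8))   # 16 (James: 16 to 1)
```

Under these assumptions the odds depend only on the difference between red and white draws, doubling for each extra red ball. Sally's margin is three balls and James's is four, so James's less lopsided sample supports the mostly-red jar twice as strongly.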

Why it is important

Representation is essential for identification and interpretation. It lets us understand something brand new without starting from square one. Sometimes, this novelty exists within ourselves. For example, when exploring our gender or sexual identity, it may be comforting to identify with a new label to understand what we are going through. Other times, this novelty exists within others. For example, if your brother comes out as gay, you might rely on what you know about your queer friends to better grasp his experience.

However, there are two problems with only relying on strict categorization.

First, we may forget to consider uniqueness. Believe it or not, we can fall completely outside of categories—just like non-binary people, who do not feel their gender falls under any strict label. In situations like these, forcing categories onto someone might bring them further away from who they actually are, rather than guiding self-exploration.

Second, many categories have incorrect associations. Many groups are plagued with stereotypes, especially when it comes to minority ones such as LGBTQ+. This means that once we learn which category a person belongs to, we may be more likely to make wrong assumptions about them than correct ones.

Since the representativeness heuristic encourages us to neglect uniqueness and believe incorrect associations, we must learn to do more than blindly trust categories when making predictions.

How to avoid it

Since categorization is so fundamental to our perception of the world, it is impossible to avoid the representativeness heuristic altogether. However, awareness is a good start. Research suggests that when people become aware that they are using a heuristic, they often correct their initial judgment.10 Pointing out others’ reliance on representativeness, and asking them to do the same for you, provides useful feedback that might help to avoid this bias.

Other researchers have tried to reduce the effects of the representativeness heuristic by encouraging people to “think like statisticians.” These nudges do seem to help, but the problem is that without an obvious cue, people forget to use their statistical knowledge, and this is true even of those in academia.10

Another strategy with potentially more durability is formal training in logical thinking. In one study, children trained to think more logically were more likely to avoid the conjunction fallacy.10 With this in mind, learning more about statistics and critical thinking might help us avoid the representativeness heuristic.

How it all started

While categorization is a modern psychology staple, sorting objects can be traced all the way back to the ancient Greek philosophers. While Plato first touched on categories in his Statesman dialogue, they became a philosophical mainstay of his student, Aristotle. In his aptly titled text Categories, Aristotle aimed to sort every object of human apprehension into one of ten categories.

Prototype theory was empirically introduced by psychologist Eleanor Rosch in 1974. Up until this point, categories were thought of in all-or-nothing terms: either something belonged to a category, or it did not. Rosch’s approach recognized that members of a given category often look very different from one another, and that we tend to consider some things to be “better” category members than others. For example, when we think of the category of birds, penguins don’t seem to fit into this group as neatly as, say, a sparrow. The idea of prototypes lets us describe how we perceive certain category members as being more representative of their category than others.

At around the same time, Kahneman and Tversky introduced the concept of the representativeness heuristic as part of their research on strategies that people use to estimate probabilities in uncertain situations. Kahneman and Tversky played a pioneering role in behavioral economics, demonstrating that people make systematic errors in judgment because they rely on biased strategies, including the representativeness heuristic.

Example 1 – Representativeness and stomach ulcers

Stomach ulcers are a relatively common ailment, but they can become serious if left untreated, sometimes even resulting in fatal stomach cancer. For a long time, it was common knowledge that stomach ulcers were caused by one thing: stress. So in the 1980s, when an Australian physician named Barry Marshall suggested at a medical conference that a kind of bacteria might cause ulcers, his colleagues initially rejected the idea out of hand.11 After being ignored, Marshall finally proved his suspicions using the only method ethically available to him: he took some of the bacteria from the gut of a sick patient, added it to a broth, and drank it himself. He soon developed a stomach ulcer, and other doctors were finally convinced.12

Why did it take so long (and such an extreme measure) to persuade others of this new possibility? According to social psychologists Thomas Gilovich and Kenneth Savitsky, the answer is the representativeness heuristic. The physical sensations people experience from a stomach ulcer (burning pains and a churning stomach) are similar to what we feel when we’re experiencing stress. On an intuitive level, we feel like ulcers and stress must have some connection. In other words, stress is a representative cause of an ulcer.11 This may have been why other medical professionals were so resistant to Marshall’s proposal.

Example 2 – Representativeness and astrology

Gilovich and Savitsky also argue that the representativeness heuristic plays a role in pseudoscientific beliefs, including astrology. In astrology, each zodiac sign is associated with specific traits. For example, Aries, a “fire sign” symbolized by the ram, is often said to be passionate, confident, impatient, and aggressive. The fact that this description meshes well with the prototypical ram is no coincidence: the personality types linked to each star sign were chosen because they represent that sign.11 The predictions that horoscopes make, rather than foretelling the future, are reverse-engineered based on what best fits with our image of each sign.

Summary

What it is

The representativeness heuristic is a mental shortcut that we use when deciding whether an object belongs to a class. Specifically, we tend to overemphasize the similarity or difference between the object and class to help us make this decision.

Why it happens

Our perception of people, animals, and objects relies heavily on categorization: grouping similar things together. Within each category is a prototype: the “average” member that best represents the category as a whole. When we use the representativeness heuristic, we compare something to our category prototype, and if they are similar, we instinctively believe there must be a connection.

Example 1 – Representativeness and stomach ulcers

When an Australian doctor discovered that a bacterium, and not stress, causes stomach ulcers, other medical professionals initially didn’t believe him because ulcers feel so similar to stress. In other words, stress is a more representative cause of an ulcer than bacteria are.

Example 2 – Representativeness and astrology

The personality types associated with each star sign in astrology are chosen because they are representative of the animal or symbol of that sign.

How to avoid it

To avoid the representativeness heuristic, learn more about statistics and logical thinking, and ask others to point out instances where you might be relying too much on representativeness.

Related TDL articles

Why We See Gambles As Certainties

The representativeness heuristic causes many other biases, including the gambler’s fallacy. This article explores the problem of gambling addiction and why it is so difficult to persuade people to stop.

TDL Perspectives: What Are Heuristics?

This interview with The Decision Lab’s Managing Director Sekoul Krastev delves into the history of heuristics, their applications in the real world, and their positive and negative effects.