Combining AI and Behavioral Science Responsibly

If you haven’t spent the last five years living under a rock, you’ve likely heard at least one way in which artificial intelligence (AI) is being applied to something important in your life. From determining the musical characteristics of a hit song for Grammy-nominated producers1 to training NASA’s Curiosity rover to better navigate its abstract Martian environment,2 AI is as useful as it is ubiquitous. Yet despite AI’s omnipresence, few truly understand what is going on under the hood of these complex algorithms — and, concerningly, few seem to care, even when it is directly impacting society. Take for example the United Kingdom, where one in three local councils are using AI to assist with public welfare decisions, ranging from deciding where kids go to school to investigating benefits claims for fraud.3

What is AI?

In simple terms, AI describes machines that are made to think and act like humans. Like us, AI machines can learn from their environments and take steps towards achieving their goals based on past experiences. The term “artificial intelligence” was coined in 1956 by John McCarthy, a mathematics professor at Dartmouth College.4 McCarthy posited that every aspect of learning and other features of human intelligence can, in theory, be described so precisely that a machine can be made to mathematically simulate them.

Back in McCarthy’s era, AI was merely conjecture that was limited in scope to a series of brainstorming sessions by idealistic mathematicians. Now, it is undergoing a sort of renaissance due to massive advancements in computing power and the sheer amount of data at our fingertips.

While the post-human, dystopian depictions of advanced AI may seem far-fetched, one must keep in mind that AI, even in its current and relatively rudimentary form, is still a powerful tool that can be used to create tremendous good or harm for society. The stakes are even higher when behavioral science interventions make use of AI. Problematic outcomes can occur when the uses of these tools are obfuscated from the public under a shroud of technocracy, especially if AI machines develop the same biases as their human creators. There is evidence that this can occur: researchers have even managed to deliberately build human cognitive biases into a machine learning model, according to an article published in Scientific Reports in 2018.5

Machines that act like us

Another term that has become almost as much of a buzzword as AI is machine learning (ML), a subset of AI that describes systems capable of learning automatically from experience, much like humans. ML is used extensively by social media platforms to predict the types of content that we are most likely to engage with, from the news articles that show up on our Facebook feeds to the videos that YouTube recommends to us. According to Facebook,6 its use of ML is for “connecting people with the content and stories they care about most.”

Yet perhaps we only tend to care about the things that reinforce our beliefs. Analysis from McKinsey & Company argues that social media sites use ML algorithms to “[filter] news based on user preferences [and reinforce] natural confirmation bias in readers”.7 For social media giants, confirmation bias is a feature, not a bug.

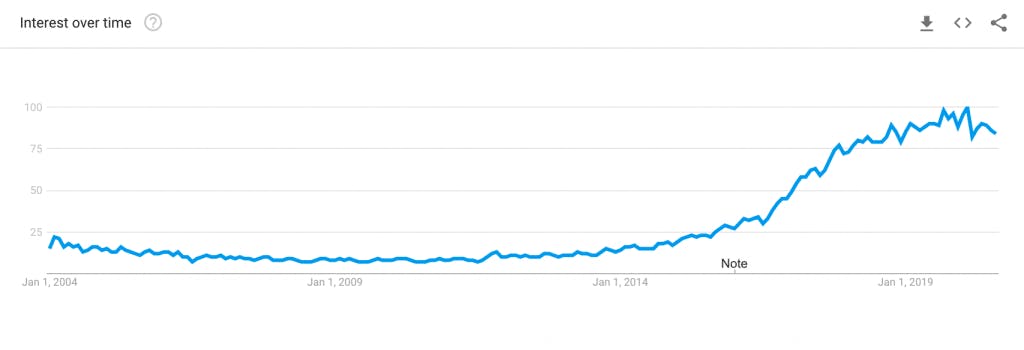

Worldwide Google searches for machine learning

Source: Google Trends

Concerns persist that ML-generated feedback loops create ideological echo chambers on social media sites,8 although research from the Oxford Internet Institute suggests that this assumption may rest on an incomplete view of individuals’ media diets.9 Either way, these (and many other) applications of ML are not inherently negative. Much of the time, it can be beneficial for us to be connected with the people and content that we care about the most. However, problematic uses of ML can cause bad outcomes: if we program machines to optimize for results that conform to our normative views and goals, they might do just that. AI machines are only as intelligent, rational, thoughtful, and unbiased as their creators. And, as the field of behavioral economics tells us, human rationality has its limits.
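To make the optimization point concrete, here is a toy sketch of how a feed that only maximizes clicks can narrow what a person sees. Everything in it is an assumption made for illustration: the two topics, the click rates, and the greedy rule are hypothetical and do not describe how any real platform works.

```python
# Toy simulation with made-up numbers; not a model of any real platform.
# Hypothetical user: somewhat prefers topic_a, but still clicks on topic_b often.
TRUE_CLICK_RATE = {"topic_a": 0.6, "topic_b": 0.4}


def simulate_greedy_feed(rounds):
    """Count how often each topic is shown by a click-maximizing feed."""
    # Start with one imaginary impression per topic at a neutral 50% click rate.
    shows = {topic: 1.0 for topic in TRUE_CLICK_RATE}
    clicks = {topic: 0.5 for topic in TRUE_CLICK_RATE}
    exposure = {topic: 0 for topic in TRUE_CLICK_RATE}

    for _ in range(rounds):
        # Greedy rule: show the topic with the highest estimated click rate.
        chosen = max(shows, key=lambda t: clicks[t] / shows[t])
        exposure[chosen] += 1
        # Update with the expected click (no randomness) so the sketch is deterministic.
        shows[chosen] += 1
        clicks[chosen] += TRUE_CLICK_RATE[chosen]

    return exposure


if __name__ == "__main__":
    print(simulate_greedy_feed(100))
```

In this sketch the hypothetical user would click on topic_b four times out of ten, yet the greedy feed never shows it: a mild preference becomes total exposure to one topic. Real systems typically add exploration and diversity constraints, so this is an illustration of the mechanism rather than a prediction.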

When AI is used for the wrong reasons

The existence of biases does not necessarily mean we should slow down or stop our use of AI. Rather, we need to be mindful as we proceed so AI doesn’t become a sort of enigmatic black box over which we ostensibly have little control. Artificial intelligence and machine learning are simply tools we have at our disposal; it is up to us to decide how to use them responsibly. Special attention is required when we use a tool as powerful as ML — one that, when partnered with behavioral science, has the potential to exacerbate the biases that impact our decision making on an unprecedented scale. Bad outcomes of this partnership could include a reinforcement of biases we have towards marginalized individuals, or myopia towards equitable progress in the name of calculated optimization. Mediocre outcomes could include the use of ML-infused behavioral science interventions to sell us more stuff we don’t need or to bureaucratize our choice environments in a web of tedium. These tools could also encourage pernicious rent-seeking by uninspired businesses, leading to stifled innovation and lower competition.

Targeted nudges

Does any good lie at the intersection of ML and behavioral science? With an asterisk that strongly cautions against bad or mediocre uses — or the act of carelessly labelling ML as a faultless panacea — the answer is yes. Behavioral science solutions that are augmented with ML can better predict what interventions will work most effectively and for whom. ML can also allow us to create personalized nudges to better scale over large, heterogeneous populations.10 These personalized nudges could do wonders for addressing qualms about the external validity of randomized controlled trials, a type of experiment that is commonly used in behavioral science to determine which interventions work and to what degree. Idealistic daydreaming isn’t necessary to think of the many different pressing policy problems that could benefit from precise nudges. From predicting which messages will be the most salient to specific individuals, to personalized health recommendations based on our unique genetic makeup, many policy areas exist as suitable candidates for these kinds of interventions.
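The idea of predicting which intervention works "for whom" can be sketched with a very simple stand-in for ML: compare response rates within each subgroup of a randomized trial instead of across the whole sample. The records, segment names, and nudge names below are invented toy values, not results from any study, and a real application would use a proper model with far more data and safeguards against overfitting.

```python
from collections import defaultdict


def make_records(segment, nudge, responses):
    """Build (segment, nudge, responded) tuples from a list of 0/1 outcomes."""
    return [(segment, nudge, r) for r in responses]


# Hypothetical trial data; toy values for illustration only.
RECORDS = (
    make_records("younger", "reminder_text", [1, 1, 1, 0])
    + make_records("younger", "social_norm_letter", [1, 0, 0, 0])
    + make_records("older", "reminder_text", [1, 0, 0, 0])
    + make_records("older", "social_norm_letter", [1, 1, 1, 1])
)


def best_overall_nudge(records):
    """One-size-fits-all choice: the nudge with the highest pooled response rate."""
    totals = defaultdict(lambda: [0, 0])  # nudge -> [responses, participants]
    for _, nudge, responded in records:
        totals[nudge][0] += responded
        totals[nudge][1] += 1
    return max(totals, key=lambda n: totals[n][0] / totals[n][1])


def best_nudge_per_segment(records):
    """Personalized choice: the best-performing nudge within each segment."""
    totals = defaultdict(lambda: [0, 0])  # (segment, nudge) -> [responses, participants]
    for segment, nudge, responded in records:
        totals[(segment, nudge)][0] += responded
        totals[(segment, nudge)][1] += 1

    best = {}  # segment -> (nudge, response rate)
    for (segment, nudge), (responses, count) in totals.items():
        rate = responses / count
        if segment not in best or rate > best[segment][1]:
            best[segment] = (nudge, rate)
    return {segment: nudge for segment, (nudge, _) in best.items()}


if __name__ == "__main__":
    print("Overall:", best_overall_nudge(RECORDS))
    print("Per segment:", best_nudge_per_segment(RECORDS))
```

With these toy numbers, the pooled analysis favors the letter, while the segment-level view suggests the text reminder for the younger group. That gap between the average effect and subgroup effects is the external validity concern that personalized nudges aim to address.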

Going forward

The benefits of using ML to improve behavioral science applications may indeed outweigh the risks of creating bad outcomes — and, perhaps more pervasively, mediocre ones. In order for us to get it right, behavioral science must play a role in identifying and correcting the harmful biases that impact both our decisions and the decisions of our intelligent machines. When using AI, we must remain faithful to a key tenet of behavioral science: Interventions should influence our behavior so we can make better decisions for ourselves, all without interfering with our freedom of choice. Like their creators, intelligent machines can be bias-prone and imperfect. It is crucial that we remain aware of this as the marriage between behavioral science and AI matures so we can use these tools purposefully and ethically.

References

1. Marr, B. (2017, January 30). Grammy-nominee Alex Da Kid creates hit record using machine learning. Forbes. https://www.forbes.com/sites/bernardmarr/2017/01/30/grammy-nominee-alex-da-kid-creates-hit-record-using-machine-learning/#79e589832cf9

2. NASA. (2020, June 12). NASA’s Mars Rover drivers need your help – NASA’s Mars exploration program. NASA’s Mars Exploration Program. https://mars.nasa.gov/news/8689/nasas-mars-rover-drivers-need-your-help/

3. Marsh, S. (2019, October 15). One in three councils using algorithms to make welfare decisions. The Guardian. https://www.theguardian.com/society/2019/oct/15/councils-using-algorithms-make-welfare-decisions-benefits

4. Nilsson, N. J. (2009). The quest for artificial intelligence. Cambridge University Press.

5. Taniguchi, H., Sato, H., & Shirakawa, T. (2018). A machine learning model with human cognitive biases capable of learning from small and biased datasets. Scientific Reports, 8, 7397. https://doi.org/10.1038/s41598-018-25679-z

6. Facebook. (2017, November 27). Machine learning. Facebook Research. https://research.fb.com/category/machine-learning/

7. Baer, T., & Kamalnath, V. (2017, November 10). Controlling machine-learning algorithms and their biases. McKinsey & Company. https://www.mckinsey.com/business-functions/risk/our-insights/controlling-machine-learning-algorithms-and-their-biases

8. Knight, M. (2018, February 10). Here’s why Facebook is such an awful echo chamber. Business Insider. https://www.businessinsider.com/facebook-is-an-echo-chamber-2018-2

9. Blank, G., & Dubois, E. (2018, March 9). The myth of the echo chamber. OII | Oxford Internet Institute. https://www.oii.ox.ac.uk/blog/the-myth-of-the-echo-chamber/

10. Hrnjic, E., & Tomczak, N. (2019, July 3). Machine learning and behavioral economics for personalized choice architecture. arXiv.org e-Print archive. https://arxiv.org/pdf/1907.02100.pdf

About the Author

Julian Hazell

Julian is passionate about understanding human behavior by analyzing the data behind the decisions that individuals make. He is also interested in communicating social science insights to the public, particularly at the intersection of behavioral science, microeconomics, and data science. Before joining The Decision Lab, he was an economics editor at Graphite Publications, a Montreal-based publication for creative and analytical thought. He has written about various economic topics ranging from carbon pricing to the impact of political institutions on economic performance. Julian graduated from McGill University with a Bachelor of Arts in Economics and Management.