To Be Right or Liked? Evaluating Political Decision-Making

In the era of Twitter mobs and polarizing pundits, it seems like we care a lot about figuring out the truth and expressing it – resoundingly. Ideological battles are constantly being waged in the halls of Congress, on our news channels, and in our Facebook feeds. We can share our worldview like never before, yet we often feel worlds apart when assessing our shared reality.

But if we care so much about being right, why do we argue so much when facts about contentious topics are readily available? If the data are gathered, shouldn’t we all be reaching the same conclusions?

Recognizing Biases in How We Attend to Information

Unfortunately, we don’t care about the truth as much as we typically think. A large body of evidence suggests that people attend to political information in strongly biased ways. Our highly social brains make discerning the truth harder than we might hope, because we often protect our existing beliefs rather than face inconvenient truths.

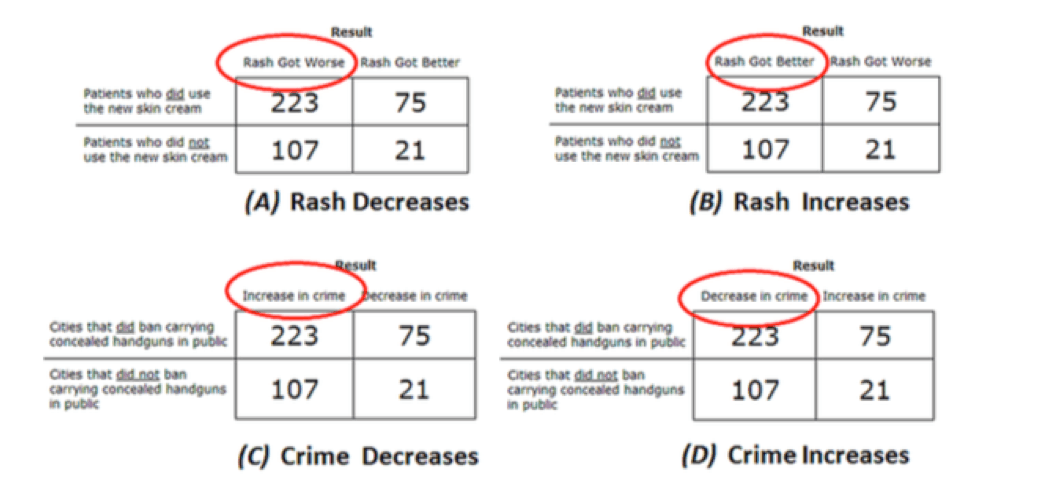

For instance, Kahan et al. (2013) tasked a nationally representative set of participants with a difficult numeracy problem. Quickly looking at the data could easily lead participants to the incorrect interpretation, for the intuitive answer was designed to be incorrect. Reaching the correct answer required participants think carefully about the data. Interestingly, people were less accurate in interpreting the same data when they believed the information came from a study on gun control than when it was about the supposed efficacy of a new skin cream.

(From Kahan et al., 2013)

In the skin cream conditions, accuracy was best predicted by participants’ previously established quantitative abilities: those with better numeracy skills were more likely to interpret the results correctly. Yet for those in the gun control conditions, interpretive accuracy was significantly predicted by whether or not the data affirmed participants’ previous beliefs. Conservatives were more accurate when the correct interpretation suggested that banning concealed guns increased crime, and liberals were more accurate when the correct interpretation suggested that banning concealed guns decreased crime.
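The trap in this kind of task can be sketched in a few lines of Python. Kahan et al. presented their data as a 2×2 table of outcomes (improved vs. worsened, with vs. without the treatment). The counts below are hypothetical, chosen only to show how the intuitive reading and the correct reading can come apart; they are not the study's figures. The intuitive shortcut compares raw numbers of improved cases, while the correct approach compares the proportion of improved cases within each group.

```python
# Hypothetical counts for illustration only; these are NOT the numbers from Kahan et al. (2013).
TREATED = {"better": 200, "worse": 80}
UNTREATED = {"better": 100, "worse": 20}


def improvement_rate(group):
    """Share of cases in a group that improved."""
    return group["better"] / (group["better"] + group["worse"])


def heuristic_answer(treated, untreated):
    """The intuitive shortcut: compare raw counts of improved cases only."""
    return "helps" if treated["better"] > untreated["better"] else "does not help"


def ratio_answer(treated, untreated):
    """The careful reading: compare improvement rates within each group."""
    if improvement_rate(treated) > improvement_rate(untreated):
        return "helps"
    return "does not help"


if __name__ == "__main__":
    print(f"Treated improvement rate:   {improvement_rate(TREATED):.3f}")
    print(f"Untreated improvement rate: {improvement_rate(UNTREATED):.3f}")
    print("Heuristic answer:", heuristic_answer(TREATED, UNTREATED))
    print("Ratio answer:    ", ratio_answer(TREATED, UNTREATED))
```

With these counts, the shortcut says the treatment helps (200 improved cases versus 100), but the improvement rates (about 71% versus 83%) show the opposite. Relabeling the same table with a politically charged framing, such as cities with and without a ban on concealed guns, leaves the arithmetic unchanged; in the study, what changed was participants' accuracy.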

Heuristic Thinking or Motivated Reasoning?

One popular theory for interpreting these and similar findings proposes that people rely too heavily on automatic, heuristic, System 1 thinking (Sunstein, 2005). Reasoning through difficult problems is hard and costly, so we often let our intuitions and emotions guide us quickly toward a decision. Problems with this mode of thinking can surface when people vote along party lines: we believe our party usually represents our values, so we may not notice when it contradicts our personal positions (Cohen, 2003).

While this theory captures part of the picture of biased thinking, it doesn’t adequately explain the results of the Kahan et al. (2013) study. The intuitive, heuristic answer was designed to lead participants astray, so why were partisans more accurate when the counterintuitive, correct answer affirmed their previous beliefs?

An alternative theory based on research in motivated reasoning can clarify these results. Motivated reasoning theorists propose that we often reason with desired conclusions in mind and selectively recruit our mental faculties toward reaching those conclusions. Studies in this area find that the quantity of information processing matters as much as the quality of reasoning. When System 1 thinking produces the conclusion we want, we stop thinking and move on; but when it produces an answer that challenges our previous assumptions, we look again and think more critically (System 2 reasoning) to try to work out the answer (Ditto, 2009).

Understanding Politically Motivated Reasoning

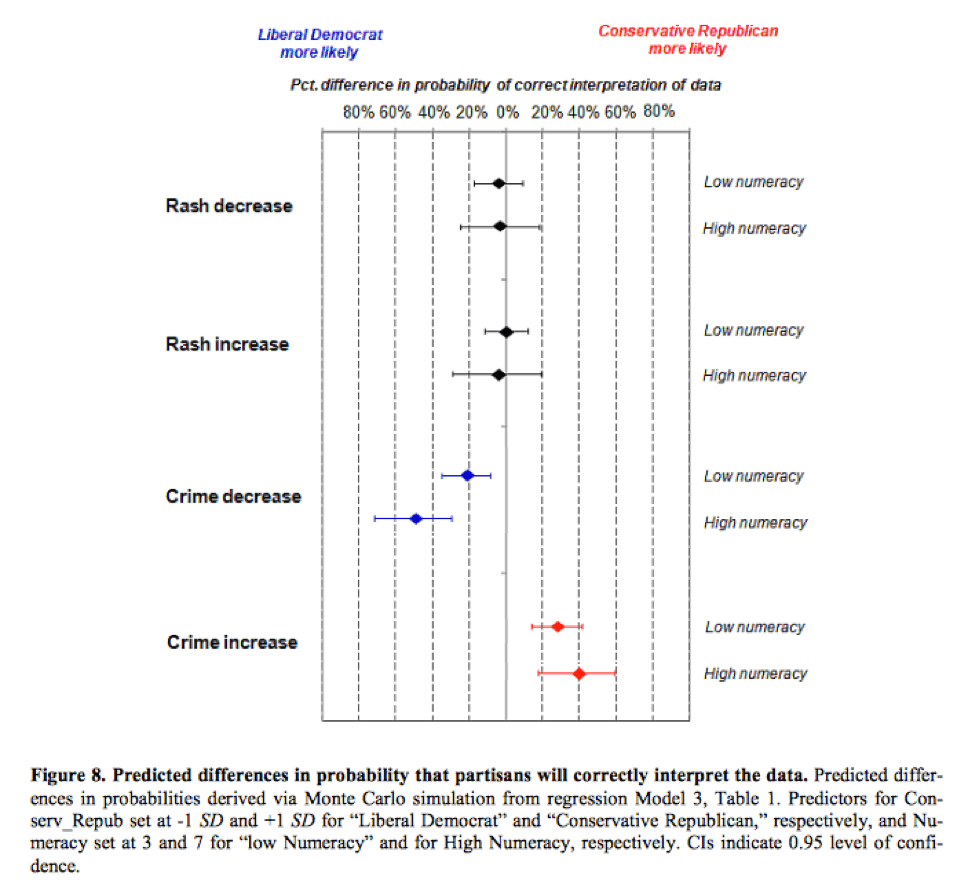

In support of this view, participants whose political assumptions were affirmed by the correct interpretation of the gun ban data were more likely to answer correctly than participants whose identities were affirmed by the incorrect heuristic assumption. This difference was 20% greater in those with the highest quantitative abilities, but no such differences emerged in the identity-neutral skin cream evaluations.

(From Kahan et al., 2013)

Essentially, highly skilled participants were more capable of reaching the correct interpretation when motivated to do so, but, like everyone else, they lacked the motivation to reason accurately about a charged topic unless their political beliefs were being challenged.

If the people most capable of accurately interpreting data are at least as biased as the rest of us, how can we hope to find common ground on our most pressing and divisive issues?

Understanding the Motivations and Incentives at Play

To start, we need to understand the motivations and incentives at play.

Part of why these results emerge is that it is often rational for individuals to believe in incorrect party platitudes. Most of us have little influence on policymaking processes, but expressing our political beliefs serves a social and psychological function even when we cannot tangibly impact policy. Everyone wants to be right, but practically speaking it’s usually more important for us to be socially accepted and internally consistent in our beliefs.

Thus, the accuracy of our views often matters less than our ability to signal our moral and political positions to others. Like the participants in this study, we have comparatively little external motivation to be correct. These two motivations rarely conflict in everyday life, but when they do, we often favor protecting our established identities and assumptions over acknowledging difficult truths.

Realigning Our Motivations

With this in mind, researchers have found that affirming individuals in non-political domains can increase their acceptance of identity-inconsistent political information (Cohen, Aronson, & Steele, 2000; Cohen & Sherman, 2014). Individuals who reflect on their personal values in an apolitical exercise process political information in a more balanced way, weighing the strength of opposing arguments with less bias (Correll, Spencer, & Zanna, 2004).

Preliminary evidence also suggests that monetary incentives can increase the accuracy of partisans’ responses to uncongenial findings (Khanna & Sood, working paper). Incorrect beliefs that are rational for each individual produce political dysfunction at the collective level, but finding ways to reward interpretive accuracy could inform institutional initiatives to reduce bias.

These promising findings, however, do not yield any obvious, practical policy remedies for our pervasive political biases. Citizens, social scientists, and policymakers will need to collaborate to test and implement solutions that move us beyond the current political gridlock.

Nevertheless, it is important for citizens to recognize that our political affiliations influence – not merely represent – our political behaviors. Until we find systematic ways to improve our reasoning, we can all take steps toward better political discourse by making an effort to challenge our own beliefs and biases. Our values are more meaningful than their political utility, so if you stumble into a heated debate, remember that the search for community, happiness, and truth transcends partisan boundaries.

About the Author

Jared Celniker

Jared is a PhD student in social psychology and a National Science Foundation Graduate Research Fellow at the University of California, Irvine. He studies political and moral decision-making and believes that psychological insights can help improve political discourse and policymaking.