TDL Brief: What’s next for polling?

On Tuesday night, Nov. 3, as election results started to roll in, it became clear that the U.S. presidential race was going to be closer than many polls and models had previously suggested.

Media outlets quickly concluded that there had been a gigantic polling misfire, even worse than in 2016. The Washington Post published an article titled “The polling industry can’t sweep its failure under the rug,” and The Conversation wrote that the 2020 election was “An embarrassing failure for election pollsters,” to name just two.

“To all the pollsters out there, you have no idea what you’re doing.”

— Lindsey Graham, after rather easily winning re-election to the Senate despite pre-election polls indicating a much closer race.

Pollsters and forecasters argue that it’s premature to diagnose the polls as wildly incorrect. In many states—including battleground states such as Arizona and Georgia—the polls turned out to be quite accurate. And to be fair, FiveThirtyEight’s final projections gave Biden less than a 1-in-3 chance of a landslide. Projections for the popular vote are likely to be pretty accurate.

But there were definitely significant misses at the state and district level, particularly in Wisconsin, Michigan, Ohio, and Florida. Polling for Senate races also projected more competitive contests in Iowa, Maine, and South Carolina than the ones we actually saw.

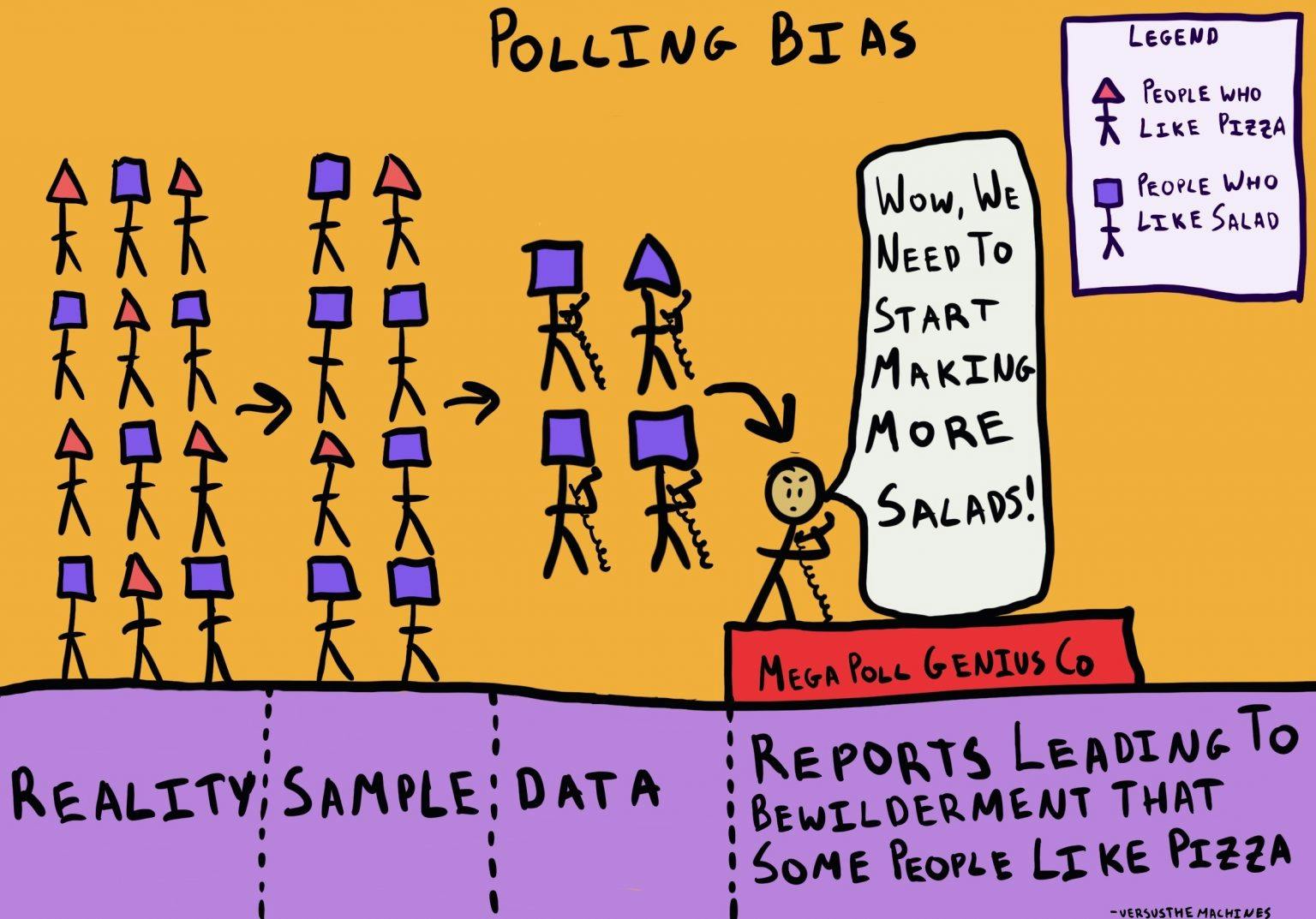

And when you look at the polling misses in both 2016 and 2020, the errors don’t seem random: they tend to understate support for the Republican Party. That is, there aren’t really any states where the polls pointed to a Republican win and the Democrat won instead. The polling errors seem to go in only one direction. Moreover, the polls seem to miss not only in the same direction nationwide, but also in the same states.

In light of this, TDL has compiled a list of hypotheses that may shed light on what, if anything, went wrong, and where to go from here.

Tom Spiegler, TDL Managing Director

1. The “shy voter” hypothesis.

By: The Conversation, “Voters’ embarrassment and fear of social stigma messed with pollsters’ predictions” (November 2016)

This was one of the theories floated after the 2016 U.S. election, and one that was mostly dismissed by pollsters at the time. However, many are paying more attention to the “shy voter” hypothesis this time around.

The argument goes like this: People are typically proud to vote for the candidate of their choice. Under normal conditions, when citizens are approached by pollsters about their voting intentions, the assumption is they’ll respond truthfully.

However, in certain circumstances, societal pressures may cause a voter to withhold their true preference from pollsters. If the norm in a voter’s social milieu is to vote for Candidate X, a vote for Candidate Y would defy that social norm. The less anonymous the polling method is, the more social pressures and norms can lead the “shy voter” to lie about their political preferences.

There is some research to support the idea that even actions carried out in private can lead to embarrassment. For instance, buying Viagra, even in the privacy of one’s home, can lead some people to feel ashamed if they believe that unenhanced sexual performance is the social norm.

The “shy voter” hypothesis is particularly apt for polls conducted over the phone, which is a less anonymous setting than an online survey. Because any embarrassment is likely to be more acute when speaking to a live interviewer, phone polls may be especially prone to undercounting support for a candidate whose supporters feel social pressure to stay quiet.

2. Distrusting voters may self-select out of polls.

By: Wired, “So How Wrong Were the Polls This Year, Really?” (November 2020)

Another hypothesis is that those with a distrust of the media and political institutions are less likely to answer a poll in the first place.

Take a pre-election poll with 500 responses: 200 for Candidate X and 300 for Candidate Y.

500 people is a reasonably large sample size, and looking at this poll, you might be confident that Candidate Y has a comfortable lead. But response rates are often 20% or lower. Suppose the response rate here was 5%. Now picture 10,000 people who were contacted: 300 said they would vote for Candidate Y, 200 said Candidate X, and 9,500 never responded. How confident are you feeling now?
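The arithmetic behind this example can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, the margin of error uses the textbook normal approximation for a simple random sample, and the nonrespondent preferences are made-up scenarios, not data from any real poll.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p from n respondents.

    Assumes a simple random sample (normal approximation); real polls
    use more complicated designs and weighting.
    """
    return z * math.sqrt(p * (1 - p) / n)

def overall_share_y(contacted, y_resp, x_resp, y_share_nonresp):
    """Candidate Y's share among everyone contacted.

    y_share_nonresp is a purely hypothetical guess at how the people
    who never answered would have split.
    """
    nonrespondents = contacted - y_resp - x_resp
    return (y_resp + y_share_nonresp * nonrespondents) / contacted

# Numbers from the example: 300 for Y, 200 for X, 10,000 contacted.
poll_share = 300 / 500
moe = margin_of_error(poll_share, 500)
print(f"Poll: Y at {poll_share:.1%}, margin of error about {moe:.1%}")

# Hypothetical scenarios for the 9,500 people who did not respond.
for assumed in (0.60, 0.50, 0.45):
    share = overall_share_y(10_000, 300, 200, assumed)
    print(f"If {assumed:.0%} of nonrespondents favor Y, Y's overall share is {share:.2%}")
```

Among respondents, Candidate Y sits at 60% with a sampling margin of error of roughly 4.3 points. But if only 45% of the silent 9,500 favor Y, Y's overall share falls to 45.75%, well outside that margin. The margin of error captures random sampling noise only; it says nothing about who chose not to answer.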

“A big worry in political polling is that there are a group of people—distrustful of the media, academia, science, etc.—who do not want to engage with pollsters and survey research,” said Neil Malhotra, a professor of political economy at Stanford.

Pollsters try to adjust for those shortcomings with various methodologies, but it’s impossible to verify their accuracy.

3. Confirmation bias among pollsters and forecasters.

By: Statistical Modeling, Causal Inference, and Social Science Forum (Columbia University) (November 2020)

The consensus after the 2016 U.S. election was that the pollsters got it largely wrong. Going into 2020, pollsters were confident that the problems of 2016 had been accounted for, and that the 2020 polls were more likely to be right.

But with the 2020 election in the rear-view mirror, it seems as though the polling error this time around looked similar to that from 2016, prompting the question: did pollsters repeat the same mistakes? And if so, why and how?

One explanation for the persisting error is confirmation bias.

Confirmation bias is our underlying tendency to notice, focus on, and give greater credence to evidence that fits with our existing beliefs.

Pollsters are human and are likely to have their own ideological and political preferences. Those preferences might lead them to overlook possible errors in the polling if it skews toward the candidate or party that they prefer.

For example, if you favor Candidate X in the upcoming election, and the polls are coming out in a way that is consistent with your beliefs about how the election should go, you will be less likely to question those results than you would be if they showed Candidate Y doing well.

4. Polls are frequently misinterpreted and misreported.

By: Laura Santhanam, “How to read the polls in 2020 and avoid the mistakes of 2016” (October 2020)

One hypothesis as to why the polls seem wrong is that we, as consumers, expect too much from them. There is a tendency among the media and general public to see polls as crystal balls, rather than as surveys that represent a range of possible outcomes, affected by a number of variables that pollsters cannot control.

According to Courtney Kennedy, who directs survey research at Pew Research Center, polls are “not designed to predict elections”—even though many of us tend to perceive them that way. When we look to pollsters as “election oracles,” we are setting ourselves up for disappointment when their forecasts diverge from reality.

It is human nature to want to foresee the future. People try to predict outcomes in other domains, such as the stock market, with varying degrees of success, but predictions of human behavior can never be 100% certain. The experts may have better predictions and models than most of us “normal” people, but as we can see, expert models and analyses are never perfect either, and we should not treat them as if they were.

About the Author

The Decision Lab

The Decision Lab is a Canadian think-tank dedicated to democratizing behavioral science through research and analysis. We apply behavioral science to create social good in the public and private sectors.