The “Social Dilemma” Dilemma

I remember how it happened. I woke up one morning to see a deluge of content on my social media feeds, all about how manipulative social media was. Office Slack channels at the tech startup where I work were abuzz with discussions of the evil tech industry. A few buzzwords were thrown around in conversation—dopamine, data harvesting, algorithms, dark patterns. Apparently, Netflix had released a docudrama called The Social Dilemma, exploring the dangerous impacts of social networking, and the misuse of data and deceptive design to manipulate people.

I just had to know more. But how could I?

Check out any time you like, but you can never…

A few weeks before this, I had decided to uninstall Netflix from my phone. I didn’t want the app sitting there as an easy getaway to bottomless entertainment. Much to my amusement, I learned that Netflix was a stock app on my phone, which means the manufacturer and Netflix had decided that the app was to be a permanent fixture on my device.

In other words: You can disable it anytime you like, but you can never leave!

The irony of all this was too much for me. Learning about a documentary on the harmful effects of social media, via social media. Watching a documentary about ethical design on a streaming service that doesn’t give me the autonomy to delete their app. Finishing the documentary and then being immediately recommended 10 other similar shows I would love, by the very algorithms that the documentary has just condemned.

As a behavioral scientist, I should have known better than to be surprised.

The problem is, if Robinson Crusoe had spotted a functional boat, he would have taken it and gotten the hell off the island. For the unacquainted, Robinson Crusoe is the protagonist of a novel by the same name, first published in 1719, telling the story of a castaway who spent 28 years on a deserted island before being rescued.1

Businesses are like Robinson Crusoe on the island: stranded. With financials that need to look fat and healthy, leaky customer funnels that need to be fixed, investors who expect returns, and customers who want the best, corporations are desperate for any solution that will help them stay afloat.

For many businesses, a boat appeared in the form of behavioral design. Here were shortcuts that seemed to solve some of their problems, delivering higher customer engagement, more returning customers, and better numbers on all the metrics they cared about. Why wouldn’t they take advantage?

I am not justifying the use of manipulation, but merely making the case that the knowledge of behavioral science that is already out there is, to use a cliché, like toothpaste out of the tube. It cannot be put back, and as long as it is out there without any guardrails, it will be misused.

Which brings me to the next point: Who is responsible for the ethics in the private sector?

The Gatekeepers of Ethics

In April 2019, in the US, Sens. Mark Warner and Deb Fischer introduced the DETOUR (Deceptive Experiences To Online Users Reduction) Act. The purpose of this Act is “To prohibit the usage of exploitative and deceptive practices by large online operators and to promote consumer welfare in the use of behavioral research by such providers.”2

The main text of the bill reads:

“(1) IN GENERAL.—It shall be unlawful for any large online operator—

(A) to design, modify, or manipulate a user interface with the purpose or substantial effect of obscuring, subverting, or impairing user autonomy, decision-making, or choice to obtain consent or user data;

(B) to subdivide or segment consumers of online services into groups for the purposes of behavioral or psychological experiments or studies, except with the informed consent of each user involved; or

(C) to design, modify, or manipulate a user interface on a website or online service, or portion thereof, that is directed to an individual under the age of 13, with the purpose or substantial effect of cultivating compulsive usage, including video auto-play functions initiated without the consent of a user.”

In other words, if approved, this bill would ban large platforms from designing user interfaces that manipulate a user’s decision-making process. It would also ban A/B testing (a kind of randomized testing where developers roll out separate versions of a feature to different users and compare their responses) unless each user involved has given informed consent, and it would make unlawful several tactics used by tech companies, such as video auto-play aimed at children under 13.3
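For product teams wondering what clause (B) could look like in practice, here is a minimal sketch of A/B assignment that only includes users who have opted in. It is an illustration, not a statement of what the bill requires: the function name `assign_variant`, the `VARIANTS` tuple, and the `has_consented` flag are all hypothetical, and I am assuming consent has been collected and stored elsewhere.

```python
import hashlib
from typing import Optional

# Hypothetical variant names for a two-arm experiment.
VARIANTS = ("control", "treatment")


def assign_variant(user_id: str, experiment: str, has_consented: bool) -> Optional[str]:
    """Return the variant for a user, or None if the user has not opted in.

    Assumption: `has_consented` reflects informed consent recorded elsewhere.
    """
    if not has_consented:
        # No consent: the user gets the default experience and is left out of the study.
        return None
    # Hash the experiment name and user ID so the same user always lands in the same group.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return VARIANTS[int(digest, 16) % len(VARIANTS)]
```

A user who returns `None` here simply sees the standard product and does not appear in the experiment's results. The design choice worth noticing is that consent is checked before any segmentation happens, rather than being filtered out of the analysis afterwards.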

To implement this, the Act proposes a Professional Standards Body, composed of representatives from the tech industry and elsewhere, to lay down “bright-line” rules and guidelines. In addition, an Independent Review Board would be established, which would be the go-to authority for approvals on any behavioral research based on user activity or user data. A violation of the guidelines would be an offence under the Federal Trade Commission Act.

This act, if approved, would mark the first attempt by any government to regulate the tech world at a design or product level.

This is a great first step, hopefully with many more to come. But it still leaves many gaping holes. Where and how do we draw the line between what is manipulation and what is not? Does this mean even marketing and advertising are manipulative? These are all questions that do not have clear answers.

Another approach to encouraging ethical design is to somehow align doing so with corporate interests. I recently heard the amazing David Evans, Senior Research Manager at Microsoft, speak at a conference, where he made an important statement: “Any ethic of behavioral design has to have, built into it, a business incentive. Otherwise, it’s just simply moralizing.”4

Now, that’s the sound of a hammer hitting the nail on the head. How do we build business incentives for ethical design? One might argue that if enough customers are aware of these manipulations, market forces will act, and customers will stop using the app. But in a battle between convenience and ethics, the scales might be tilted just a little bit in favour of convenience. Previous research shows that even for “conscious consumers,” several economic and social factors (such as family, convenience, and price) weigh heavily on their choice of products, often leading them to decide against more ethical options.5

One way could be to make ratings of apps on app stores more granular and ethics-focused: Is this app useful? Is this app suitable for all age groups? Does this app’s design use “dark patterns” to deceive the user?

As you can see, there are many questions and I cannot claim to have the answers. However, as a practicing behavioral scientist, I do believe there is hope.

What behavioral scientists can do

While we’re still trying to answer these difficult questions about legislation and business incentives for ethical design, here are some things that you can do at your own organization. In my few years as a behavioral scientist, I have set down a few rules for myself and for anyone who takes my advice. These are:

There will be no gatekeeper other than yourself. The one person most distressed about the misuse of dynamite in war was its inventor, Alfred Nobel himself, because as the creator he knew how harmful it was. Similarly, no one knows the side effects of the misuse of behavioral science better than behavioral scientists do. We didn’t need a Netflix documentary to understand the risks.

Instead of waiting for it to be called out, be the gatekeeper of ethics in your organization. Create an ethics charter. Socialize it. Let everyone know this is important. I have never ended a single workshop I have conducted without a slide on ethics. In tech product jargon, it is not a “nice-to-have,” it is a “must-have.”

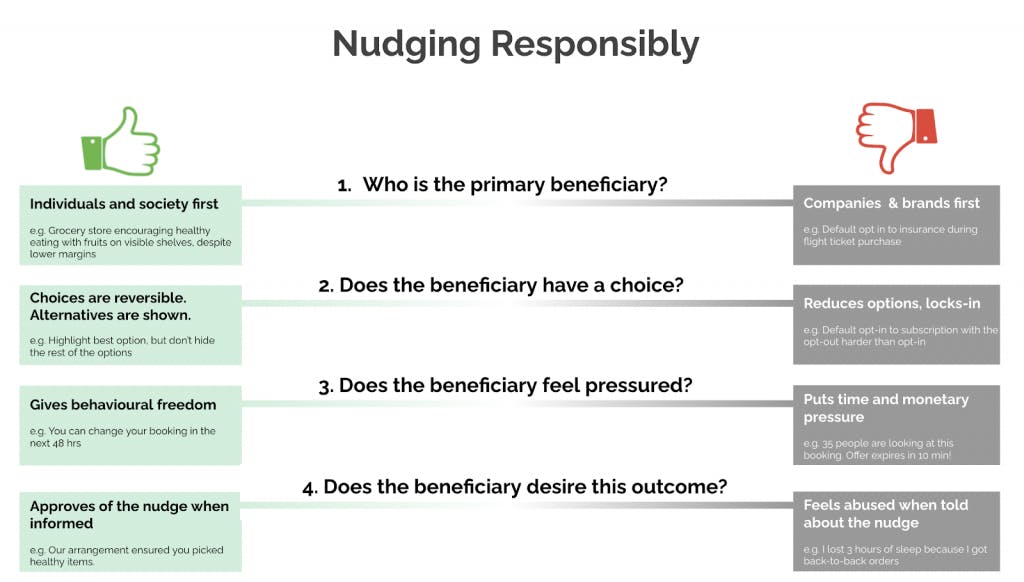

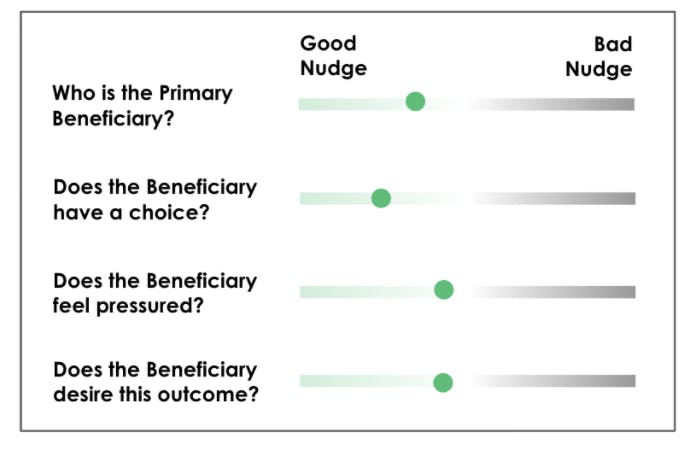

Make a simple ethics checklist. There are many ethics guidelines available on the internet. Adopt any of them. Early in my career, I stumbled upon a 4-question checklist, which, with minor changes, satisfied all my needs. I have stuck with that ever since. (I have to apologize here: I adopted this checklist so long ago that I am unable to find a reference for it. But if anyone knows the origins of this framework, please feel free to reach out to me and I would be more than happy to give credit where it’s due.)

Ask these 4 questions for every design feature you make.

Ethics must be part of the process. The product process is long—user research, design, engineering, marketing. But it’s never long enough that it would be impossible to accommodate ethics. Make sure getting approval on the ethics checklist is a part of the product process. Make it easy to use so that there is no friction in its adoption. Here’s a templatized version of the above checklist:

When a product fails on the checklist, initiate a discussion with the team. What are the compromises the business is willing to make? Will the negative impact on customers be worth the risk? If not, what are the changes that need to be made?

In an ideal world, upholding ethics should not merely be the duty of behavioral scientists, but that of the entire product world: product managers, designers, business managers. But it has to start somewhere, and where better to start than by cleaning your own house? Hopefully, over the years, just like Design Thinking, Ethical Behavioral Design will become an established process.

’Til then, we fight for ethics, one red notification at a time!

References

1. Wikipedia. (n.d.). Robinson Crusoe. Wikipedia, the free encyclopedia. Retrieved October 30, 2020, from https://en.wikipedia.org/wiki/Robinson_Crusoe

2. US Congress. (2019, April 9). Text – S.1084 – 116th Congress (2019-2020): Deceptive experiences to online users reduction act. Library of Congress. https://www.congress.gov/bill/116th-congress/senate-bill/1084/text

3. Kelly, M. (2019, April 9). Big tech’s ‘dark patterns’ could be outlawed under new Senate bill. The Verge. https://www.theverge.com/2019/4/9/18302199/big-tech-dark-patterns-senate-bill-detour-act-facebook-google-amazon-twitter

4. Evans, D. (2019, March 11). Evans DC Ethics of Behavioral Design [Video]. YouTube. https://youtu.be/KvHRokv63-0

5. Szmigin, I., Carrigan, M., & McEachern, M. G. (2009). The conscious consumer: taking a flexible approach to ethical behaviour. International Journal of Consumer Studies, 33(2), 224-231.

About the Author

Preeti Kotamarthi

Preeti Kotamarthi is the Behavioral Science Lead at Grab, the leading ride-hailing and mobile payments app in South East Asia. She has set up the behavioral practice at the company, helping product and design teams understand customer behavior and build better products. She completed her Masters in Behavioral Science from the London School of Economics and her MBA in Marketing from FMS Delhi. With more than 6 years of experience in the consumer products space, she has worked in a range of functions, from strategy and marketing to consulting for startups, including co-founding a startup in the rural space in India. Her main interest lies in popularizing behavioral design and making it a part of the product conceptualization process.