I have a rule about using Netflix in my household: We watch dark, scary shows on my partner’s account, and humorous sitcoms on mine.

I claim that this is to maintain an easy differentiation for the recommendation algorithm and so that, depending on our mood, we can pick the relevant account and start watching right away. But to be honest, the real reason I insist on this separation is that it makes me feel good about beating Netflix at its own game.

This way, as far as Netflix knows, I am a bright person with a sunny disposition, who only watches positive, uplifting 20-minute comedies, hardly ever binge-watches, and will happily return to old favorites such as Modern Family and Friends every few months. My partner, on the other hand, is a dark personality who watches crime shows and thrillers (sometimes through the night), loves getting into the minds of psycho killers, and will consume anything that matches this description.

But who are we really? Well, I am not spilling the beans here and I definitely don’t intend to solve this mystery for Netflix.

Me 1, Netflix 0. Or so I think.

But who else do I hide my true self from? My fitness app? My grocery shopping app? Amazon? Spotify? As more and more platforms go down the path of using data to personalize the customer experience, this cat-and-mouse game will only get more interesting.

Why does personalization work? What are its limits? How does psychology make an appearance in this complicated tech story? In this article, I’ll be breaking this down.

The complex world of personalization

Personalization refers to the use of historical data of a consumer to curate their experience on a platform, making it more customized. We see this everywhere—for instance, when you open an app and it starts by greeting you with your first name, shows you recommendations based on your past purchases, and convinces you to buy something by giving you a discount on exactly the thing you wanted. Or when you open a music app and there’s a playlist for the somber mood you’re currently in.

Most tech companies today rely heavily on personalization technology. And rightly so: it leads to more customer engagement and more revenue. The numbers speak for themselves:

- 75% of content watched on Netflix is based on the platform’s recommendations.1

- 50% of listening time on Spotify comes from personalized playlists created using such technologies.2

- 70% of time spent endlessly scrolling through YouTube videos comes from intelligent recommendations.3

- 35% of products bought on Amazon were recommended by the algorithm.4

And let’s face it: as much as I might try to hide my true self from Netflix, a personalized experience makes me feel good. According to an Accenture survey, a whopping 91% of consumers are more likely to shop with brands that recognize them, remember them, and provide relevant offers and recommendations.5 On top of that, 83% of consumers are willing to share their data to enable a personalized experience.

Image courtesy of The Marketoonist

You may be wondering: If users want personalization, then what’s the problem? The problem is that personalization is a bit like walking a tightrope. A very thin line separates the “good” kind of personalization from the creepy kind.

“I like it because it’s so similar to me” can easily become “I don’t like it because it’s eerily similar to me.”

“This is relevant to me and saves me time and effort” can easily become “The algorithm is stereotyping me and that’s not cool.”

This switch from good to bad is where user psychology comes in. Understanding the real reason why personalization works can help us understand why it does not work sometimes.

When does personalization really work?

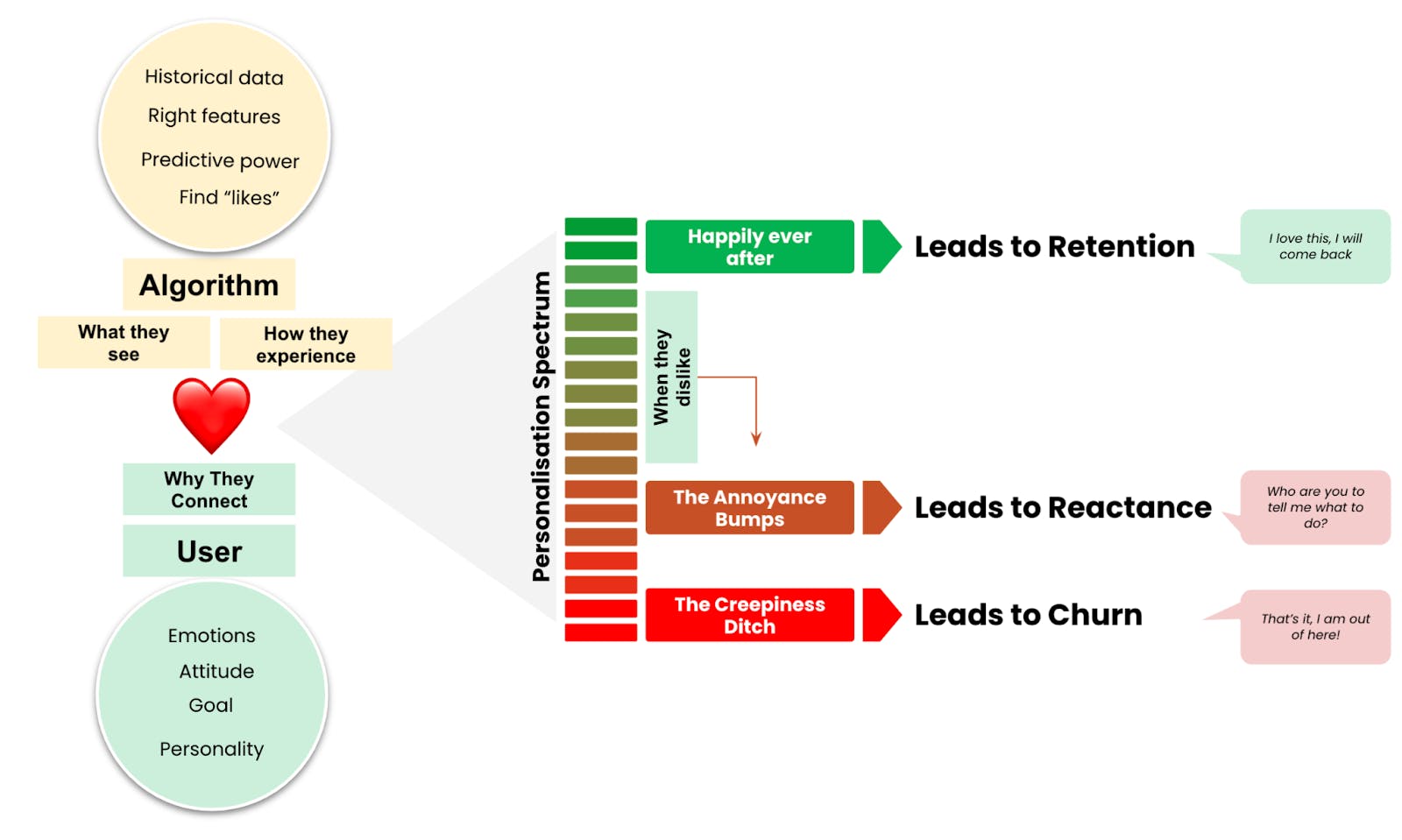

If you ask a tech person about the science behind personalization algorithms, they will tell you something along these lines: once you have sufficient historical data about consumers, you build a model and find the features that best predict a user’s behavior. You settle on a model with high predictive power, use it to find similar consumers in the data set, and aggregate their behaviors. Put together, this lets you predict a user’s behavior and show the right recommendations.
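To make that description concrete, here is a minimal sketch of one common approach, user-based collaborative filtering. It is only an illustration: the users, titles, and ratings are made up, the function names (`cosine_similarity`, `recommend`) are hypothetical, and production recommenders at companies like Netflix are far more elaborate.

```python
from math import sqrt

# Hypothetical viewing history: user -> {title: rating on a 1-5 scale}.
ratings = {
    "ana":   {"Friends": 5, "Modern Family": 4, "Mindhunter": 1},
    "ben":   {"Friends": 4, "Modern Family": 5, "Brooklyn Nine-Nine": 5},
    "chloe": {"Mindhunter": 5, "Dark": 4, "Friends": 1},
}


def cosine_similarity(a, b):
    """Cosine similarity between two sparse rating dicts."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[title] * b[title] for title in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, k=2):
    """Rank titles the user has not seen by similarity-weighted ratings
    from the k most similar users."""
    target = ratings[user]

    # Step 1: find the most similar users ("similar consumers in the data set").
    neighbours = sorted(
        (
            (cosine_similarity(target, other), name)
            for name, other in ratings.items()
            if name != user
        ),
        reverse=True,
    )[:k]

    # Step 2: aggregate their behavior, weighting each rating by similarity.
    scores = {}
    for similarity, name in neighbours:
        if similarity <= 0:
            continue
        for title, rating in ratings[name].items():
            if title not in target:
                scores[title] = scores.get(title, 0.0) + similarity * rating

    # Step 3: highest aggregated score first.
    return sorted(scores, key=scores.get, reverse=True)
```

With this sample data, `recommend("ana", ratings)` returns `["Brooklyn Nine-Nine", "Dark"]`: ana's tastes sit closest to ben's, so the sitcom he rated highly outranks the thriller from the less similar chloe.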

Well, they are right. But the only thing they missed is the user—the actual person. When does a user want something that’s personalized for them? Turns out, quite a few ducks have to arrange themselves in a row for a user to like what has been personalized for them. Here are just a few to get you started on this:

1. Emotion match: Consumers operate in different emotional states, and this impacts their perception of the context. Emotions include psychological arousal (such as “peak” or extreme emotions like anger, worry, and awe), general mood valence (feeling happy or sad), and active thinking style (positive or negative).

A study of New York Times headlines showed that content that evokes high-arousal positive emotions (e.g. awe) or negative emotions (e.g. anger or anxiety) gets shared the most, indicating a “match” with the reader.7 In other words, an algorithm will work best when it somehow matches the contextual emotional state of the customer.

2. Attitude match: Consumers have different attitudes towards different things, and these attitudes color how they make decisions. Types of attitudes could include a preference for facts vs a preference for emotions; moral attitudes, such as core principles and beliefs; political attitudes; and so on. An experimental study showed that emotional ads worked well for individuals with a high need for affect, whereas cognitive ads (which share facts and information) worked well for individuals with a high need for cognition.8

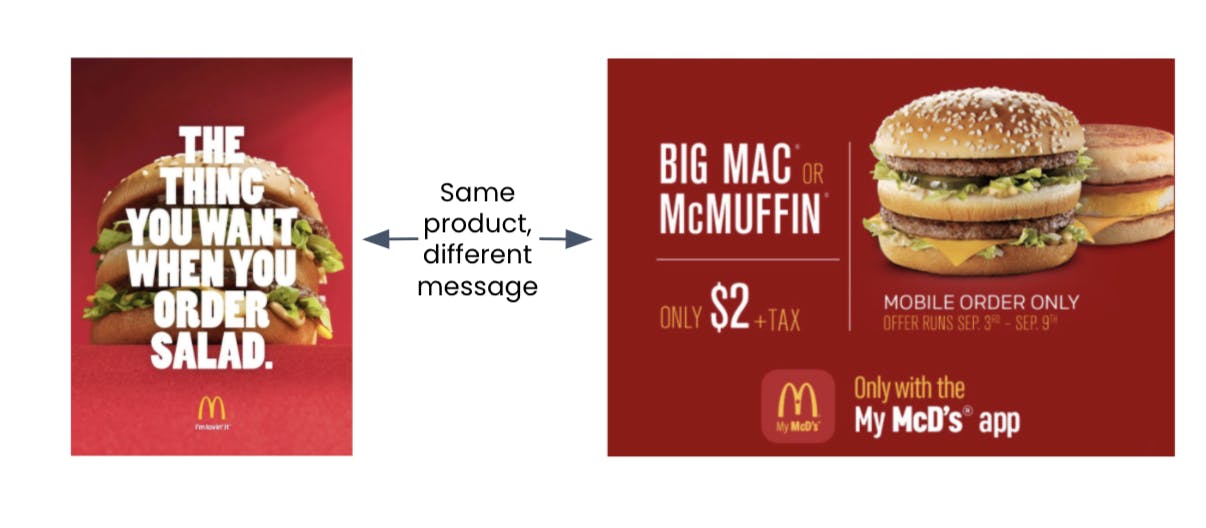

Consider the McDonald’s example below. Both ads sell the same product, but have different appeals.

So an algorithm, while highly skilled at predicting what consumers will respond to best, might still need to take into consideration the consumer’s attitude towards different kinds of appeal, whether factual or emotional.

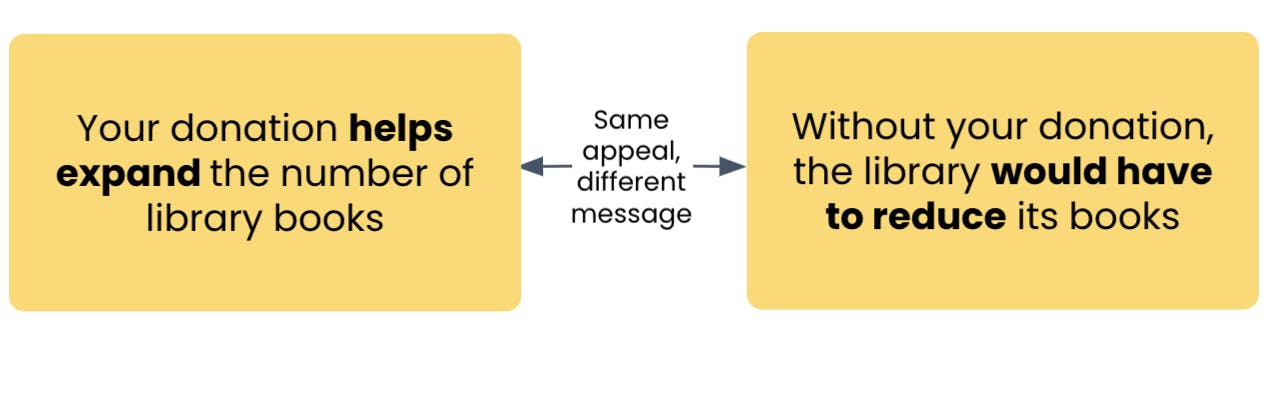

3. Goal match: Consumers approach decisions with different types of goal states, and they look for information that can help them achieve these goals. For example, a hedonic purchase (i.e. something you buy purely for pleasure) serves a different goal than a utilitarian purchase (i.e. something you buy as a means to an end).

Similarly, approach goals (wanting to embrace the positives) vs avoidance goals (wanting to avoid the negatives) have different requirements. An experimental study showed that donation appeals for a library framed in terms of rewards worked well for approach-oriented people, while appeals framed in terms of losses worked well for avoidance-oriented people.9 An algorithm will have to keep this in mind when deciding how to show content to a user.

4. Personality match: Many studies have shown that user psychographics are an important determinant of their behavior. Personality dimensions are measured on various scales. The most famous, the Big 5 or OCEAN personality model, is quite universal and has been adopted around the world. Spotify published a paper where they showed a clear correlation between song choices and different personality traits.10 Thus, personality traits are another thing that algorithms need to take into consideration.

As you can see, the right algorithm and the right data are just one part of the puzzle. Even if these fall into place, personalization still needs the other piece, i.e. the understanding of user psychology.

So, now that data, algorithms, and user psychology are in place, do we have a match made in heaven?

The pitfalls: When does personalization fail?

Unfortunately, even after all this, personalization can fail.

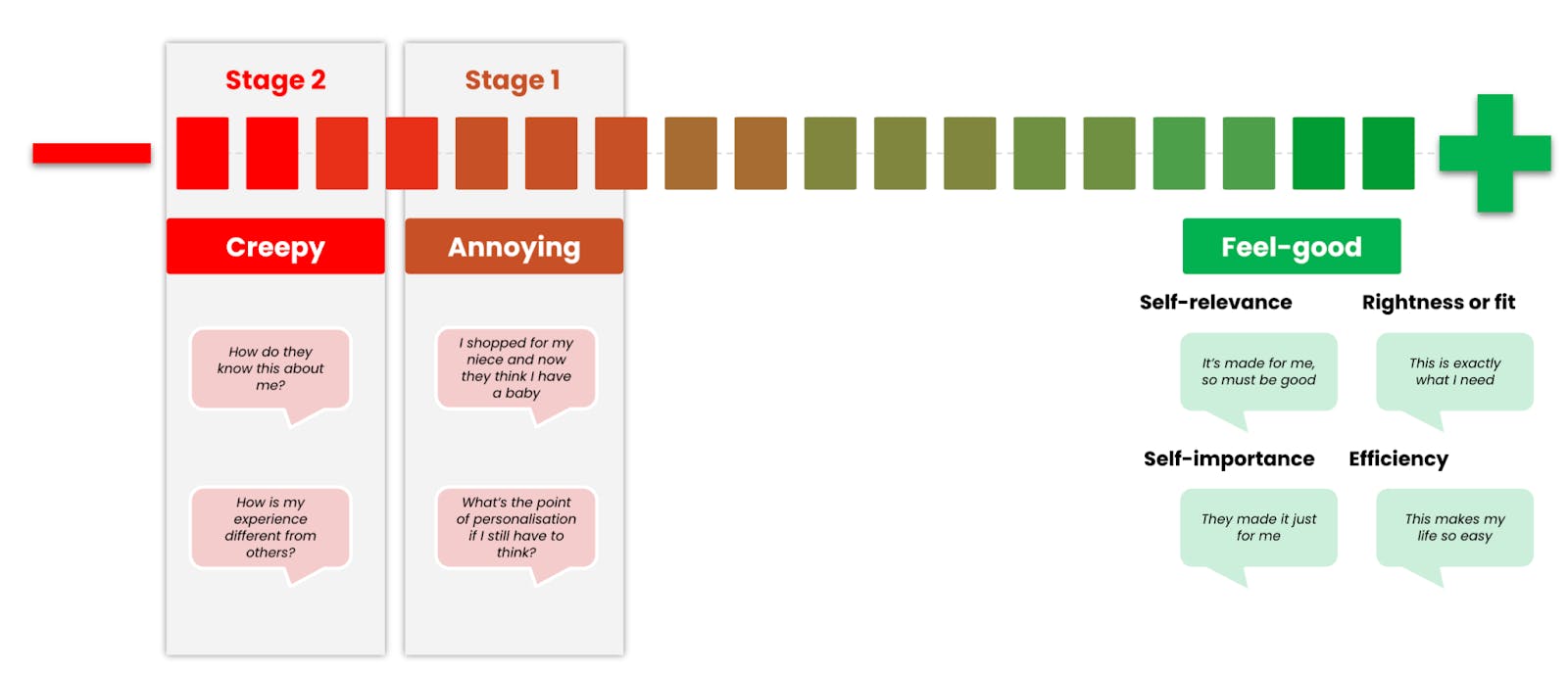

Image: The spectrum of personalization reactions

Let’s break the failure down into two stages.

Stage 1: Annoyance bumps

The annoyance bump is a slight bump in the journey that causes customers to question personalization. In this case, the user generally holds a positive view of personalization, but some experiences leave a sour taste in their mouth. Some of these include:

- Irrelevant personalization: When personalization segments a user into a category based on unrepresentative, one-off purchases. For example, I bought my partner a PlayStation and now I’m getting ads for a bunch of video games.

- Insensitive personalization: When personalization does not take into account real-world context. For example, in 2014 the photo-printing company Shutterfly sent out mass congratulatory “new baby” emails, not taking into account the number of recipients who might be going through miscarriages or fertility issues.11

- Unhelpful personalization: When, despite personalization, the cognitive load for the consumer does not go down. For example, people often complain about not being able to choose quickly on Netflix, despite the personalization.

Stage 2: The Creepiness Ditch

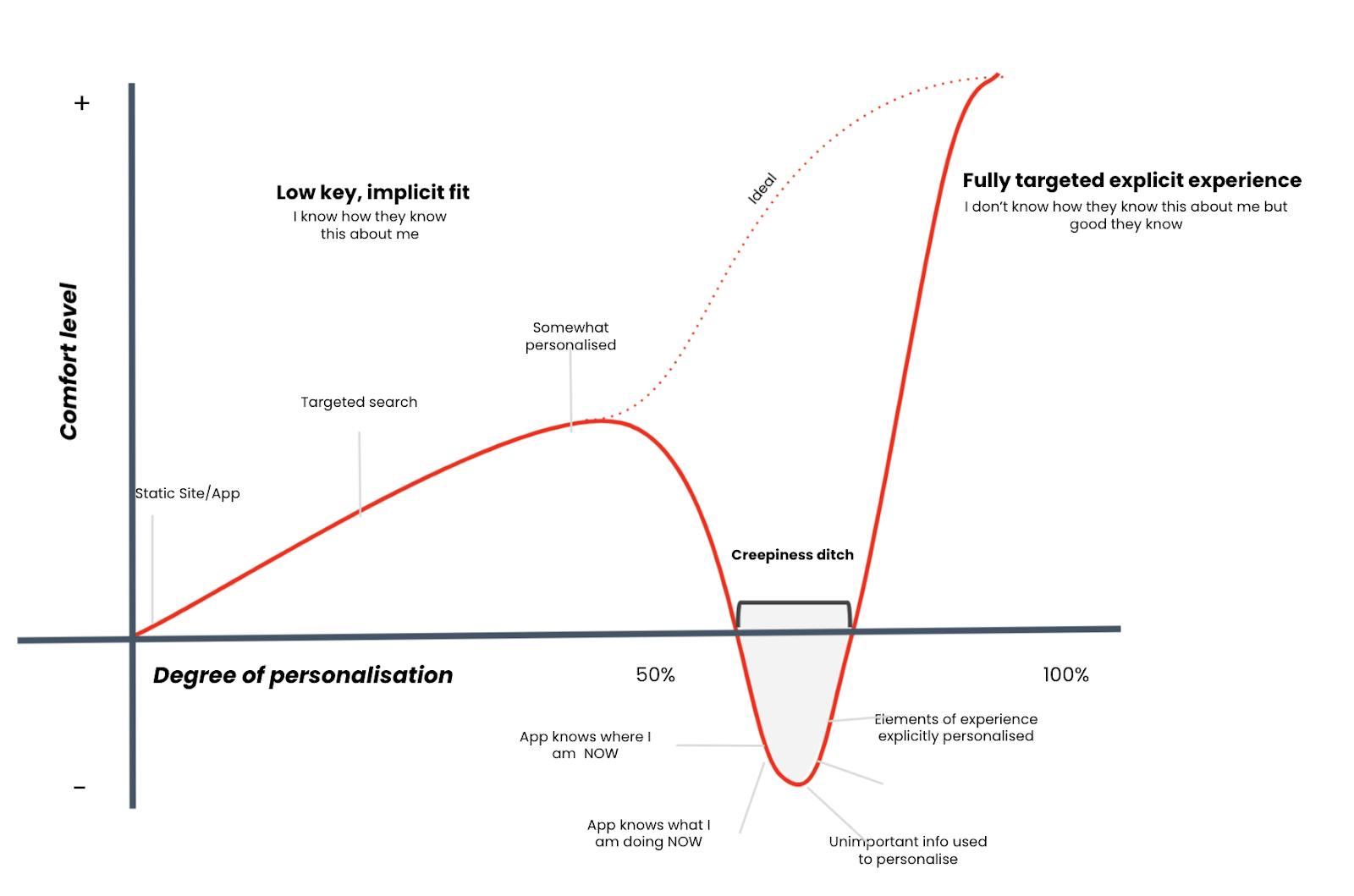

This term, coined by John Berndt, is an important twist in the personalization story.12 The creepiness ditch is the growing discomfort people feel when a digital experience becomes personalized in a way that is disorienting or intrusive.

Image: Adapted from Personalization Mechanics by John Berndt

In the creepiness ditch lie serious offences, such as:

- Stereotyping: When messages target somebody based on a stigmatized or marginalized identity, personalization fails. In one study, when consumers believed they had received an ad for a weight loss program based on their size, they felt “unfairly judged” by the matched message.13

- Excessive Retargeting: When the same messages are shown repeatedly, it leads to reactance from consumers. 55% of consumers put off buying when they see such ads. When they see the ad 10 times, more than 30% of people report actually getting angry at the advertiser.14

- Privacy: When a message is too tailored and consumers become consciously aware of the targeting, the sense of feeling tricked can cause the match to backfire.

The creepiness ditch matters because customers who fall into it churn. There are many stories of big tech companies faltering here. A few years back, Netflix drew controversy when viewers objected to targeted artwork: the poster shown for a movie varied based on how the algorithm had identified the viewer (including by racialized identities such as “black”).15 Similarly, Amazon was called out for using algorithms that recommended anti-vaccine bestsellers and juices that (falsely) purported to cure cancer.16

Making sure personalization works

The full picture tells us that making personalization work the right way is beneficial for both users and companies.

To make personalization really work, design, data, and algorithm teams need to audit themselves on five pillars:

- Control: Are we giving users enough control over the personalization? Do users know they can control personalization? Can the user decide what data they wish to share with us?

- Feedback: Are we letting users give us feedback on our personalization? Can they tell us when something seems irrelevant to them?

- Choice: Do users have a choice to opt in to personalization? Can they choose to not be a part of the system at all?

- Transparency: Are we sharing with users why they are seeing a certain personalization? Do users know how the algorithm works?

- Ethics: Are we independently assessing our personalization outcomes on ethics? Do we have scope to engage third-party assessors for such audits?17

These are just some guidelines that can help companies be mindful of the pitfalls of personalization, and ensure they steer away from the bumps and the ditches. Like all things in life, some amount of control only makes the experience better for all stakeholders.

Don’t get me wrong, I am still batting for personalization. Even as I type this, Spotify is playing me “focus” music, which it knows makes me more productive. After finishing this article, I will go watch something on my happy Netflix account. Or maybe I will indulge myself with a thriller on my dark Netflix account. Or maybe I will create a third account and watch only documentaries, just to confuse the good folks at Netflix. It’s a fun game. They know it, I know it.

References

1. Gomez-Uribe, C. A., & Hunt, N. (2015). The Netflix recommender system: Algorithms, business value, and innovation. ACM Transactions on Management Information Systems (TMIS), 6(4), 1-19.

2. Spotify Technology S.A., Form F-1, submitted to the U.S. Securities and Exchange Commission.

3. www.theverge.com/2017/8/30/16222850/youtube-google-brain-algorithm-video-recommendation-personalized-feed

4. MacKenzie, I., Meyer, C., & Noble, S. (2013). How retailers can keep up with consumers. McKinsey & Company, 18.

5. Making It Personal: Pulse Check 2018, Accenture. Available at https://www.accenture.com/_acnmedia/PDF-77/Accenture-Pulse-Survey.pdf

6. https://marketoonist.com/2016/09/journey.html

7. Berger, J., & Milkman, K. L. (2012). What makes online content viral? Journal of Marketing Research, 49(2), 192-205.

8. Haddock, G., Maio, G. R., Arnold, K., & Huskinson, T. (2008). Should persuasion be affective or cognitive? The moderating effects of need for affect and need for cognition. Personality and Social Psychology Bulletin, 34(6), 769-778.

9. Jeong, E. S., Shi, Y., Baazova, A., Chiu, C., Nahai, A., Moons, W. G., & Taylor, S. E. (2011). The relation of approach/avoidance motivation and message framing to the effectiveness of charitable appeals. Social Influence, 6(1), 15-21.

10. Research at Spotify: https://research.atspotify.com/just-the-way-you-are-music-listening-and-personality/

11. https://www.forbes.com/sites/kashmirhill/2014/05/14/shutterfly-congratulates-a-bunch-of-people-without-babies-on-their-new-arrivals/?sh=6bde1841b089

12. https://www.amazon.com/Personalization-Mechanics-Targeted-Content-Teams-ebook/dp/B00UKS4PYE#:~:text=Drawing%20on%20interviews%2C%20product%20evaluations,team%20implementing%20it%20to%20a

13. Teeny, J. D., Siev, J. J., Briñol, P., & Petty, R. E. (2020). A review and conceptual framework for understanding personalized matching effects in persuasion. Journal of Consumer Psychology.

14. https://www.inskinmedia.com/blog/infographic-environment-matters-improving-online-brand-experiences/

15. https://www.theguardian.com/media/2018/oct/20/netflix-film-black-viewers-personalised-marketing-target#:~:text=But%20now%20the%20streaming%20giant,is%20targeting%20them%20by%20ethnicity.

16. https://www.theguardian.com/commentisfree/2020/aug/08/amazon-algorithm-curated-misinformation-books-data

17. https://www.newamerica.org/oti/reports/why-am-i-seeing-this/introduction/